ARTIFICIAL INTELLIGENCE

The idea of authenticity has always rested on trust. We once trusted a handwritten letter because we could feel the impressions the pen pressed into the paper and see the uneven strokes that revealed the hand behind the words.

Today, that confidence is no longer automatic because AI can compose fluent essays, generate photorealistic images and create videos that depict people saying things they never said.

TRUST IN AI IS BEING TESTED!

Fazmina Imamudeen explores the importance of authenticity in a complex era

Deciding what to believe has become part of everyday life.

A 2023 survey by the Pew Research Center found that 52 percent of Americans are more concerned than excited about the expanding role of artificial intelligence in daily life.

Concerns about authenticity lie at the heart of that anxiety: when even casual social media users need to second-guess whether an image or a quote is genuine, a fundamental layer of trust in the digital sphere begins to erode.

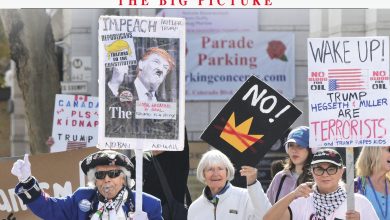

In the run-up to recent elections in several countries, digitally manipulated clips appeared online showing candidates making statements they had never made. The BBC reported that these deepfakes spread quickly and had real potential to sway public opinion.

The question isn’t simply whether we can spot a fake, but whether we can still believe what we see and hear.

Generative systems such as Midjourney and Stable Diffusion are trained on millions of existing images, many of them scraped from artists’ portfolios without permission. Lawsuits filed in the US argue that this practice amounts to copying artists’ styles without their consent.

For artists who spend years developing a distinctive voice, an algorithm that can imitate that voice in a matter of seconds is not only an economic threat but an assault on the very notion of authenticity.

Recently, social media was flooded with Ghibli-style AI images and filters. Yet back in 2016, when Studio Ghibli cofounder Hayao Miyazaki was shown an experimental AI-generated animation, his reaction was sharp. He called it “an insult to life itself” and stressed that creation without an understanding of pain or compassion produces only hollow imitation.

Because machines can now produce convincing facsimiles, authenticity can no longer be judged by the finished product alone. Instead, it must be judged by process and intention.

A photograph altered with AI tools may still be authentic if the artist is open about his or her methods and accepts responsibility for the result. Political speeches partly drafted with the help of language software can remain authentic if the speakers embrace the words as their own. What matters isn’t purity free of technology but honesty about its role.

This is also why imperfection has become valuable.

Human work often carries slips and hesitations – signs of the struggle behind a creation. Those marks, once treated as flaws, are now read as clues that a human made the work. Against a background of machine-generated smoothness, they’re increasingly cherished as evidence of what’s real.

Many news organisations now run dedicated units to verify images and videos suspected of being AI-generated. Tech firms are experimenting with watermarking and provenance tools that record how and when content was created. This matters because, without shared standards of verification, the line between authentic and artificial may blur beyond repair.

The truth is that authenticity in the AI era is a shared responsibility: creators must disclose the role technology plays in their work, audiences must cultivate scepticism, and platforms must enforce transparency.

Machines can generate content, but they have no emotions. To be authentic is to act from a human place of responsibility, vulnerability and lived experience. That’s what deserves credibility – and it is what no algorithm can replace.